For nearly all communications on today’s internet, domain names play a crucial role in providing stable navigation anchors for accessing information in a predictable and safe manner, irrespective of where you’re located or the type of device or network connection you’re using. The underpinnings of this access are made possible by the Domain Name System (DNS), a behind-the-scenes system that maps human-readable mnemonic names (e.g., www.Verisign.com) to machine-usable internet addresses (e.g., 69.58.187.40). The DNS is on the cusp of expanding profoundly in places where it’s otherwise been stable for decades and, absent some explicit action, may do so in a very dangerous manner.

Over the past 15 years hundreds of millions of domain names have been added to the internet’s DNS, and well over two billion (that’s Billion!) new users, ~34 percent of the global population, have become connected. And whether they know it or not, they all use the DNS daily to navigate to their favorite websites, chat with friends, share photos and conduct business. This process is in large part made possible by the DNS root server system, which serves a critical function in the stable operation of the internet. Made up of 13 root servers operated by 12 different organizations, the root server system makes available the DNS’s root zone, which holds the names and addresses of the authoritative servers for all 317 existing top-level domains (TLDs), like .com, .net, .gov, .org, and .edu to name a few. Every time an internet user accesses information on the internet, their application (e.g., a web browser) talks to their local operating system (e.g., Windows), which works to resolve the name of the computer where the information resides to an internet address in order to enable access to the information. If the internet address that maps to the name isn’t known already, the operating system will ask domain name servers on the internet where to find it. Those domain name servers start at the root, which is the authoritative source for the global DNS, and follow a series of delegations that proceed downward until they get the IP address that maps to the domain name they desire. Only then can the application connect and obtain the information the user desires. And this all happens in a fraction of a second.
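To make the "start at the root and follow delegations downward" idea concrete, here is a minimal sketch of that walk. It uses no real DNS queries: the delegation and address tables, the `resolve` function, and the `www.example.com` / `192.0.2.10` values are all hypothetical stand-ins chosen for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical, hard-coded data standing in for real name servers.
# DELEGATIONS: for each zone, the name suffixes it hands off to a child zone.
DELEGATIONS: Dict[str, Dict[str, str]] = {
    ".": {"com.": "com."},
    "com.": {"example.com.": "example.com."},
}
# ADDRESSES: for each zone, the names it can answer for directly.
ADDRESSES: Dict[str, Dict[str, str]] = {
    "example.com.": {"www.example.com.": "192.0.2.10"},
}


def resolve(name: str) -> Tuple[str, List[str]]:
    """Walk from the root zone downward until some zone answers for `name`.

    Returns the address and the list of zones visited along the way.
    """
    if not name.endswith("."):
        name += "."  # treat the name as fully qualified
    zone = "."
    path = [zone]
    while True:
        answer = ADDRESSES.get(zone, {}).get(name)
        if answer is not None:
            return answer, path
        # Look for a delegation in this zone whose suffix covers the name.
        next_zone = None
        for suffix, child in DELEGATIONS.get(zone, {}).items():
            if name == suffix or name.endswith("." + suffix):
                next_zone = child
                break
        if next_zone is None:
            raise LookupError(f"{name} does not exist below {zone}")
        zone = next_zone
        path.append(zone)


address, zones_visited = resolve("www.example.com")
print(address, zones_visited)
```

A real resolver also caches answers and talks to name servers over the network; the sketch only shows why every uncached lookup ultimately depends on the root.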

Even though the internet has grown exponentially in the last decade, throughout this immense growth period the DNS root zone contents have been quite stable, with an average growth rate of less than 5 net new TLDs per year. This stability is largely credited to the hierarchical tree structure of the DNS, which enables decentralized (i.e., delegated) responsibility for administration and operations. Figure 1 illustrates the hierarchical allocation structure of the DNS, where nodes in the DNS tree are grouped into administrative regions called zones, and because a zone comprises only adjacent nodes, the zones also form a hierarchy (hence “hierarchical tree structure”).

As you might expect, the depth of the hierarchy of names below each TLD varies considerably. For example, certain generic TLDs (gTLDs), such as .com, where organizations commonly obtain domain name “delegations” directly from that TLD, generally fan out more than country code TLDs (ccTLDs) that heavily employ more structural organization based on things like functional categories or geopolitical boundaries (e.g., www.elections.state.ny.us).

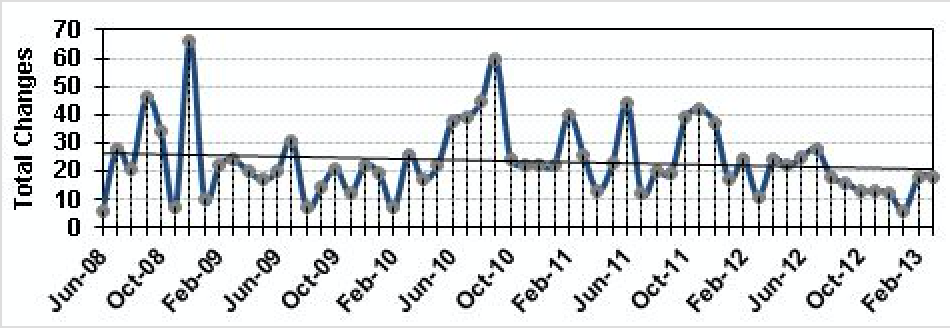

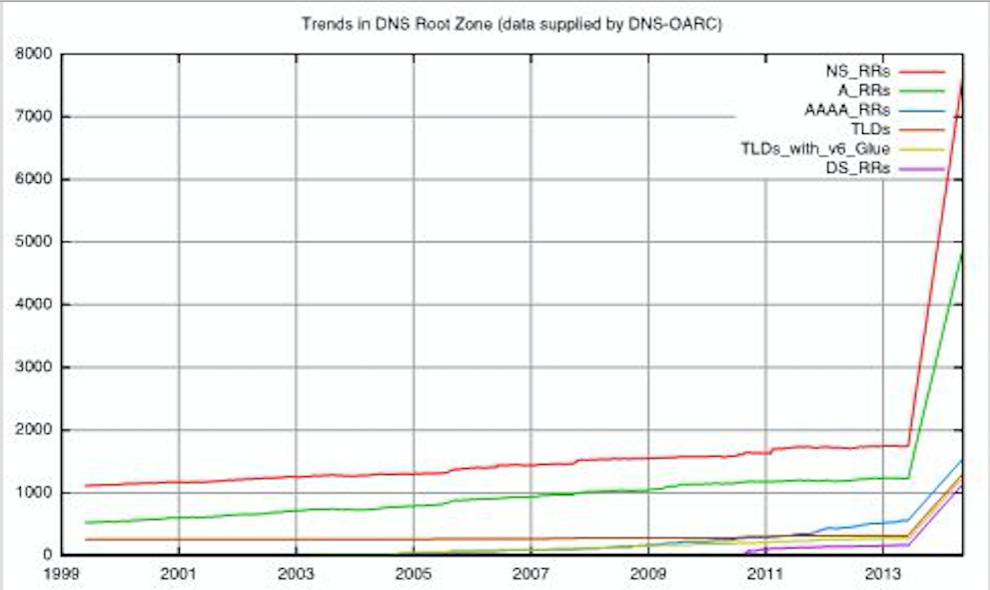

As noted above and illustrated in this DNS-OARC plot, the growth rate of the root zone has been fairly modest over the past 15 years, adding only 66 net new TLDs since 1999 (45 of which are internationalized domain names, or IDNs). As part of the Root Zone Maintainer role, Verisign maintains the authoritative database containing the root zone data for distribution to all the root servers. Twice per day, whether there have been root zone changes (e.g., new name servers, IPv6 addresses, new TLD delegations, etc.) or not, Verisign generates a new root zone file from this database and makes it available to all the root server operators. Figure 2 illustrates changes in the root zone over the past ~58 months. Between June of 2008 and March of 2013 there were 1,371 total changes (~0.8 changes/day average), adding only 37 net new TLDs.
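The ~0.8 changes/day average can be checked with a few lines of arithmetic. This is only a sketch: the post gives the months, not exact dates, so the start and end days below are assumptions.

```python
from datetime import date

# Assumed endpoints: the post only says "June of 2008" and "March of 2013".
start = date(2008, 6, 1)
end = date(2013, 3, 31)

total_changes = 1371  # root zone changes over the period (from the post)

days = (end - start).days
changes_per_day = total_changes / days
print(f"{days} days, {changes_per_day:.2f} changes/day")
```

Shifting either endpoint by a few weeks moves the result only slightly, so the rounded figure of roughly 0.8 changes per day holds under any reasonable reading of the date range.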

In mid-2010 the root zone was first signed with Domain Name System Security Extensions (DNSSEC) to enable authentication and object integrity, and Delegation Signer (DS) records (used to identify and verify the DNSSEC signing key of a delegated zone) were added, arguably the most abrupt and impactful change to date. Even so, the rate of change in the root zone has been fairly modest over the past decade, although the relatively recent introduction of IPv6 AAAA records, IDNs and DNSSEC records over the last couple of years has had pronounced combined effects on zone churn and on the aggregate size of the zone.

The relatively low rate of churn in the root zone and its slow growth have contributed to the stability of the zone over the years. However, the proposed rate of introduction of new gTLDs (and their corresponding name servers, IPv4 and IPv6 addresses, and DNSSEC records) being discussed today would introduce much more volatility to the root zone and will likely increase the root zone size considerably.

Figure 3 illustrates the historical root zone growth as a baseline, from which we projected what the root zone size might look like after we add the first 1,000 new gTLD delegations as proposed by ICANN (using a simple linear model). The current tide seems to suggest that a maximum of 1,000 new gTLDs per year would be an acceptable provisioning threshold into the root once delegations begin. We used that number as a basis to estimate a rate of 20 additional TLDs per week and simply projected the scale of the root zone contents from June 2013 out 50 weeks (leading to a total of 1,317 TLDs and associated resource records at completion of the exercise).

As you can see, over the past 14 years the average rate of introduction of new TLDs was ~0.12 TLDs/week (66 total). Our 20 new delegations per week is only an assumption, as the mechanisms and policy framework to manage this are not clearly apparent (i.e., not available or even published) at this time. Yet this increases the rate of growth by a factor of 174. A rough estimate after one year and 1,000 new gTLDs yields a considerable increase (in size and frequency) in nearly all these attributes of the root zone:

- Zone file size: 278KB to 4.85MB (~17x)

- Rate of new delegations: 0.12 TLDs/week to 20/week (~174x)

- Change management churn: ~0.8/day @317 TLDs to 3.32/day @1,317 TLDs (~4x)
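The figures in the list above can be roughly reproduced from the numbers quoted in this post. The sketch below rests on two assumptions that the post does not state explicitly: that change churn scales in proportion to the number of TLDs (which happens to reproduce the 3.32/day figure), and that the zone file sizes use decimal units. Note that the rounded 0.12 TLDs/week baseline yields a rate factor near 167, so the ~174x figure presumably comes from an unrounded baseline.

```python
# Inputs quoted in the post.
current_tlds = 317
new_per_week = 20              # assumed provisioning rate
weeks = 50
historical_per_week = 0.12     # rounded historical rate of new TLDs
current_changes_per_day = 0.8
size_before_kb = 278
size_after_mb = 4.85

# Simple linear projection of the TLD count.
projected_tlds = current_tlds + new_per_week * weeks

# Ratio of the assumed delegation rate to the (rounded) historical rate.
rate_factor = new_per_week / historical_per_week

# Assumption: churn grows in proportion to the number of TLDs in the zone.
projected_changes_per_day = current_changes_per_day * projected_tlds / current_tlds
churn_factor = projected_changes_per_day / current_changes_per_day

# Assumption: 1 MB = 1000 KB for the zone file sizes.
size_factor = size_after_mb * 1000 / size_before_kb

print(f"projected TLDs:        {projected_tlds}")
print(f"delegation rate ratio: ~{rate_factor:.0f}x")
print(f"changes per day:       {projected_changes_per_day:.2f} (~{churn_factor:.0f}x)")
print(f"zone file size ratio:  ~{size_factor:.0f}x")
```

The projected zone file size itself comes from the linear model behind Figure 3 and is taken as given here rather than rederived.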

While an increase of ~1,000 delegations doesn’t in and of itself produce a large zone file relative to other zones and systems in the internet, it’s certainly a marked one for the root zone itself, and it could have far-reaching implications: there’s a lot at risk if something breaks in the root, as everything below it (i.e., everything) could be impacted. This risk is amplified by three facts: the root server system is operated by 12 different organizations with vastly different capabilities; it depends on hundreds of discrete servers globally to operate correctly; and no holistic system currently exists to monitor, instrument or identify stresses or potentially at-risk strings (e.g., SAC045, PayPal, which is discussed in the SSR and which we’ll discuss in more detail in later blog posts) across the root server system.

Now consider that these root server system capabilities have been recommended time and again as a critical dependency for the introduction of new gTLDs, since at least 2009, by expressly chartered and independent expert teams, including the Scaling the Root and TNO Root Scaling Study teams and ICANN’s very own Security and Stability Advisory Committee (SSAC) [SAC042, SAC045, SAC046]. Some would argue that the stability and security of the entire new gTLD program (and existing registries) was predicated on the existence of such an early warning system and instrumentation capability; after all, nearly all navigation on the internet ultimately begins at the root. Yet many of these recommendations remain unresolved. They call for operationalizing these capabilities before delegations begin, in order to establish baselines and identify risks associated with currently unknown at-risk strings, such as those described by PayPal in a recent letter to ICANN. Such risks cannot be gauged strictly on existing query volume alone, and even that hasn’t been evaluated across the system.

In fairness, the holistic monitoring and instrumentation capability usually isn’t a problem, as a single operational entity typically operates a zone’s authoritative servers. However, the root is very atypical among DNS zones in that so many different organizations operate its authoritative servers. Called root operators, each of the 12 operates a single letter, with the exception of Verisign, which operates two (A and J). It would be hard to pick a more diverse set of organizations than the current set of root operators. The set contains commercial, non-profit, educational and governmental organizations, and there’s some arguable robustness and resiliency realized through this diversity.

Complicating the issue of monitoring, instrumentation and zone distribution even more, many root server operators use various techniques, including IP anycasting, to operate multiple instances of a single letter simultaneously in more than one location around the globe. More information on the root server operators and locations can be found on the Root Server Technical Operations website. Once the root zone file itself has been provisioned, it needs to be distributed to the various root servers. Some of the root operators operate only a single server for their letter, while others operate tens or hundreds of servers globally, often in low-bandwidth, low-power, and lossy networks in developing regions. The root zone has to be distributed to all of these locations without fail.

There are also direct resolution-centric issues that need to be considered once new gTLDs are members of the root zone ecosystem. For example, we must seek to understand the impact on the dynamics of query traffic to root name servers and on the efficacy of caching and other scaling techniques in broad use today, as well as anticipate the impact of unstable registry operators in a flatter, more dynamic, and more volatile internet namespace.

However, to begin understanding the effects of a much more volatile root zone, we must first understand the current query dynamics to the root server system for each proposed new gTLD string before these rapid changes occur. We must put an early warning system in place, as has been called for by all the requisite technical advisory committees to ICANN. We must specify a careful new gTLD delegation and corresponding impact analysis capability. And we must ensure that we have a well-vetted “brakes” framework codified to be able to halt changes, roll back, and recover from any impacts that the delegation of new gTLD strings may elicit.

It’s all simply good engineering that will enable the safe, secure introduction of new gTLDs to the internet.