In 2010, ICANN’s Security and Stability Advisory Committee (SSAC) published SAC045, a report calling attention to particular problems that may arise should a new gTLD applicant use a string that has been seen with measurable (and meaningful) frequency in queries for resolution by the root system. The queries to which they referred involved invalid top-level domain (TLD) queries (i.e., non-delegated strings) at the root level of the domain name system (DNS), queries which elicit responses commonly referred to as Name Error, or NXDomain, responses from root name servers.

The SSAC report cited examples of strings that were queried at the root at a high frequency based on measurement and analysis by several internet research laboratories, including CAIDA and DNS-OARC. SSAC provided two key sets of recommendations to ICANN in section six of their 2010 report based upon their analysis and consideration of the issues. Those recommendations were as follows:

Recommendation (1): The SSAC recommends that ICANN promote a general awareness of the potential problems that may occur when a query for a TLD string that has historically resulted in a negative response begins to resolve to a new TLD. Specifically, ICANN should:

- Study invalid TLD query data at the root level of the DNS and contact hardware and software vendors to fix any programming errors that might have resulted in those invalid TLD queries. The SSAC is currently exploring one such problem as a case study, and the vendor is reviewing its software. Future efforts to contact hardware or software vendors, however, are outside SSAC’s remit. ICANN should consider what if any organization is better suited to continue this activity.

- Contact organizations that are associated with strings that are frequently queried at the root. Forewarn organizations who send many invalid queries for TLDs that are about to become valid, so they may mitigate or eliminate such queries before they induce referrals rather than NXDOMAIN responses from root servers.

- Educate users so that, eventually, private networks and individual hosts do not attempt to resolve local names via the root system of the public DNS.

Recommendation (2): The SSAC recommends that ICANN consider the following in the context of the new gTLD program.

- Prohibit the delegation of certain TLD strings. RFC 2606, “Reserved Top Level Domain Names,” currently prohibits a list of strings, including .test, .example, .invalid, and .localhost. ICANN should coordinate with the community to identify a more complete set of principles than the amount of traffic observed at the root as invalid queries as the basis for prohibiting the delegation of additional strings to those already identified in RFC 2606.

- Alert the applicant during the string evaluation process about the pre-existence of invalid TLD queries to the applicant’s string. ICANN should coordinate with the community to identify a threshold of traffic observed at the root as the basis for such notification.

- Define circumstances where a previously delegated string may be re-used, or prohibit the practice.

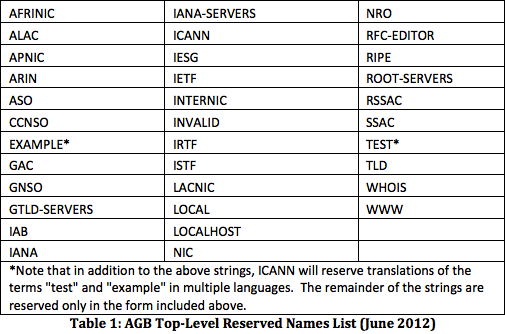

At the highest level there were two key sets of folks these recommendations were meant to protect. The first and most obvious within the ICANN community were the new gTLD applicants, the folks who would be associated in some manner with the operations of registry infrastructure supporting new gTLDs. These are most certainly the folks who will observe unanticipated system load, or have to bear operational burden and other costs, from what they might otherwise have considered general noise or background radiation associated with operating these new gTLD registries. In response to these recommendations, ICANN did reserve a number of strings in the new gTLD Applicant Guidebook (AGB), although apparently without conducting any study of the topic. Table 1 was extracted from Section 2.2.1.2.1, Reserved Names, of the AGB, and depicts the strings they prohibited as of June 2012.

If one were to assess the frequency of occurrence of these strings in queries at the root, perhaps, for example, from a “parts per million” perspective, most of these strings would likely yield negligible results. Of note is the list of reserved names in RFC 2606: .test, .example, .invalid, and .localhost (updated by RFC 6761), all of which see a reasonably large number of queries, and were included in Table 1. More importantly, there’s an absence of potentially problematic strings, such as those discussed in SAC045, and in Appendix G, Private DNS Namespaces, of RFC 6762.

Table 1 makes it abundantly clear to me that the recommendations regarding actual analysis of data to identify and prohibit registration of potentially problematic strings were misapplied. Instead, what we have is essentially a list of various “groups” and terms that are either directly or loosely associated with ICANN in some manner or other. I think the mark was horribly missed here and while it may be awkward to reorient at this point, there’s really not any other option.

The second set of folks the recommendations were meant to protect were the end users, the global internet operators, the organizations, and all other stakeholders that would potentially become impacted parties: those who should be “forewarned” about impending delegations such that they can mitigate or eliminate risks to their operations before delegations occur.

Perhaps not surprisingly, those consumers, organizations and end users, who are all relying parties of the existing global DNS to which the “forewarning” recommendation above was meant to apply, were also the targets of additional recommendations by SSAC. These appear in SAC046 (2010) and again in SAC059 (2013), and build on preceding foundational work by Lyman Chapin and others in their ICANN-commissioned Scaling the Root study published in 2009. Of particular note, the Scaling the Root study, SAC045, SAC046, and SAC059 discuss and reiterate the need to:

- Develop a capability to instrument the root server system in order to see who is resolving what and how frequently;

- Develop capabilities across the root server system to provide an early warning system and rollback capability if things go awry; and

- Conduct “interdisciplinary studies” that assess the impact to “user communities” and forewarn them of impending new gTLD delegations before their operations are impacted.

These three recommendations all seem prudent and reasonably straightforward to me. They’d certainly all seem to be squarely within the public interest, and in the event that we’ve been unclear up to this point: in addition to concern for the potential actual damage and loss to businesses and consumers, the risks and liabilities that result from the unresolved nature of these recommendations are at the very core of Verisign’s concerns with regard to the security and stability issues with the new gTLD program. We cannot unilaterally transfer these risks to consumers, most of whom don’t even know who ICANN is!

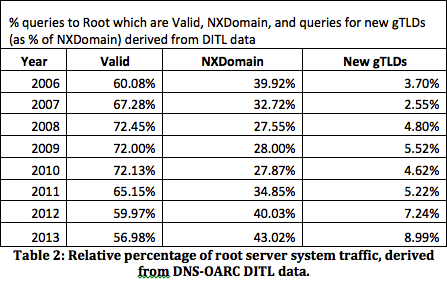

OK, off the soapbox and on to some supporting technical bits. We routinely see hundreds of millions, if not billions, of queries every single day at the root level of the DNS that result in NXDomain responses. This represents some 43 percent of all queries at the root in 2013, measured using DNS-OARC’s DITL data. Many of these NXDomain queries, anywhere from ~4 percent in 2006 to ~9 percent in 2013, are associated with applied-for gTLD strings, as depicted in Table 2.
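To make those two percentages concrete, here is a minimal Python sketch of how one might compute them from a query log. Everything in it is an assumption for illustration: the `SAMPLE` records are made up (not DITL data), `APPLIED_FOR` is a tiny hypothetical subset of applied-for strings, and the helper names are mine.

```python
from collections import Counter

# Hypothetical sample: (queried name, response code) pairs as they might be
# extracted from a root-server capture. This is NOT real DITL data.
SAMPLE = [
    ("www.example.com.", "NOERROR"),
    ("printer.home.", "NXDOMAIN"),
    ("mail.corp.", "NXDOMAIN"),
    ("foo.local.", "NXDOMAIN"),
    ("ns1.example.net.", "NOERROR"),
    ("wpad.corp.", "NXDOMAIN"),
    ("example.org.", "NOERROR"),
    ("localhost.", "NXDOMAIN"),
]

# Assumed subset of applied-for strings, for illustration only.
APPLIED_FOR = {"home", "corp", "box", "cisco"}


def tld_of(qname):
    """Return the rightmost label of a query name, lowercased."""
    return qname.rstrip(".").lower().split(".")[-1]


def nxdomain_summary(records, applied_for):
    """Summarize NXDOMAIN share overall and the applied-for share within it."""
    total = len(records)
    nx_by_tld = Counter(tld_of(q) for q, rcode in records if rcode == "NXDOMAIN")
    nx_total = sum(nx_by_tld.values())
    nx_applied = sum(n for tld, n in nx_by_tld.items() if tld in applied_for)
    return {
        "nxdomain_share": nx_total / total if total else 0.0,
        "applied_for_share_of_nxdomain": nx_applied / nx_total if nx_total else 0.0,
        "by_tld": nx_by_tld,
    }


summary = nxdomain_summary(SAMPLE, APPLIED_FOR)
print(summary["nxdomain_share"], summary["applied_for_share_of_nxdomain"])
```

The per-TLD counter is kept in the result on purpose, since a raw count on its own says little about risk.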

Of course, the operative terms measurable and meaningful in SAC045 provide some key guidance here. That is, how do you instrument the root server system in such a manner that you obtain accurate information across a reasonable timescale to assess the occurrence of queries across the system for a given string? And once you have that information, what other considerations need to be given to assess the level of risk associated with delegating that string?

For example, you could base such a risk assessment solely on observed query frequency and determine that .home, .corp, and a few other obvious strings are at risk. In a recent analysis from Verisign’s DNS measurement team, .corp was queried from a broad set of unique sources residing in over 8,500 discrete autonomous systems (ASNs), and it trailed only .box, .cisco, and .home in the number of unique second-level domains queried. Moreover, to SSAC’s point about “meaningful” measurements, query frequency alone is clearly insufficient to gauge risk: even a single query from some source may indicate that a system relies on the current behavior in order to function properly within its operating environment, so each must be assessed on its own and within a broader context. Also worth considering are where queries are coming from, the semantics of query patterns as seen at the root, and how security protections, practices, and already-issued X.509 materials might be exploited against unsuspecting consumers. Even looking at who may be driving any particular aspect of query streams (maybe even on a per-string basis), what motivates clients to issue some of these queries, or an evaluation of the labels themselves could be important. Query frequency data without context isn’t enough.
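As a rough illustration of why frequency alone misleads, the Python sketch below tallies, per TLD, the query count alongside the number of distinct source ASNs and distinct second-level labels. The `OBS` records are hypothetical (the ASNs come from the range reserved for documentation), and this is not the method Verisign’s measurement team used, just one way such counts could be produced.

```python
from collections import defaultdict

# Hypothetical observations: (queried name, source ASN).
# ASNs 64500-64502 are from the range reserved for documentation.
OBS = [
    ("mail.corp.", 64500),
    ("wpad.corp.", 64501),
    ("mail.corp.", 64502),
    ("router.home.", 64500),
    ("router.home.", 64500),
    ("router.home.", 64500),
    ("router.home.", 64500),
]


def diversity_by_tld(observations):
    """Count queries, distinct source ASNs, and distinct second-level labels per TLD."""
    stats = defaultdict(lambda: {"queries": 0, "asns": set(), "slds": set()})
    for qname, asn in observations:
        labels = qname.rstrip(".").lower().split(".")
        entry = stats[labels[-1]]
        entry["queries"] += 1
        entry["asns"].add(asn)
        if len(labels) >= 2:
            # Only names with at least two labels carry a second-level label.
            entry["slds"].add(labels[-2])
    return {
        tld: {
            "queries": s["queries"],
            "unique_asns": len(s["asns"]),
            "unique_slds": len(s["slds"]),
        }
        for tld, s in stats.items()
    }


result = diversity_by_tld(OBS)
for tld, row in sorted(result.items()):
    print(tld, row)
```

In this toy data .home wins on raw volume, yet all of its queries come from one source asking for one name, while .corp is spread across more networks and more names.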

Heck, with a bit of elbow grease (or keyboard action) and a measurement apparatus, one could create a set of risk factors, measure them and tabulate an empirical evaluation of risky labels. Perhaps something that took (say) six or seven threat vectors and used them to rank the riskiness of all of the applied-for strings based on known security and stability issues. Such a measurement system could produce a reasonable risk matrix for new gTLDs that could drive subsequent action. In such a matrix, a set of who, what, where, how, and motivations might be codified, collated, and measured so that some more semantically meaningful risk could be assessed.

Some folks may weigh the existence of certain risk factors higher or lower than others. But again, risk is a subjective notion, and people tend to get wound around the axle about relative scoring. My two cents is that it’s all about what the inputs are and what you’re worried about.
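A toy version of such a risk matrix might look like the Python sketch below. The four factor names, the scores, and the label `exampletld` are all hypothetical placeholders I made up (a real matrix would use measured inputs and likely more vectors); the point is only that the ranking shifts with the weights you choose.

```python
# Hypothetical risk factors, each assumed to be normalized to the range 0..1.
FACTORS = ("query_volume", "source_diversity", "sld_diversity", "cert_exposure")

# Made-up scores for illustration; "exampletld" is a placeholder label.
SCORES = {
    "corp": {"query_volume": 0.5, "source_diversity": 1.0,
             "sld_diversity": 0.5, "cert_exposure": 1.0},
    "home": {"query_volume": 1.0, "source_diversity": 0.5,
             "sld_diversity": 0.25, "cert_exposure": 0.25},
    "exampletld": {"query_volume": 0.0, "source_diversity": 0.25,
                   "sld_diversity": 0.0, "cert_exposure": 0.0},
}


def rank(scores, weights):
    """Return (label, weighted score) pairs, riskiest first."""
    total_weight = sum(weights[f] for f in FACTORS)
    ranked = []
    for label, factors in scores.items():
        score = sum(weights[f] * factors[f] for f in FACTORS) / total_weight
        ranked.append((label, score))
    # Sort by descending score, breaking ties alphabetically.
    ranked.sort(key=lambda item: (-item[1], item[0]))
    return ranked


equal = {f: 1.0 for f in FACTORS}
volume_heavy = dict(equal, query_volume=10.0)

print([label for label, _ in rank(SCORES, equal)])
print([label for label, _ in rank(SCORES, volume_heavy)])
```

With equal weights .corp ranks first; weight volume heavily and .home overtakes it, which is exactly the relative-scoring argument people get wound up about.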

Whatever set of attributes is used to develop a risk matrix and calculate how risky a particular string is, all of these things require that you have the ability to instrument the root server system (all the roots), perform some analysis to assess the risk to each individual string given the considered attributes, assess the potentially impacted parties, and take some action to forewarn them and enable them to mitigate potential impacts or disruptions should a delegation occur. It’s not rocket science, but it is work.

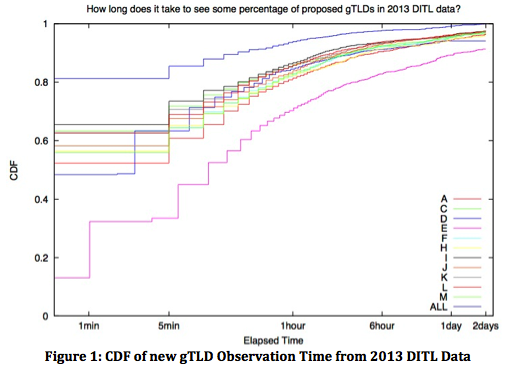

The aim of such assessments must be accuracy, not just precision across a statistically sampled sliver of data. Figure 1 illustrates that if you look at a single root server letter, on average, you’ll see queries for 97 percent of proposed gTLD strings within 48 hours (note: D-root and E-root data are excluded from this calculation because those operators were not able to contribute a full data set). If you look at 11 root letters combined, it takes about 46 hours to see queries for 100 percent of the proposed gTLD strings (also note: DITL 2013 doesn’t have data from B or G root).

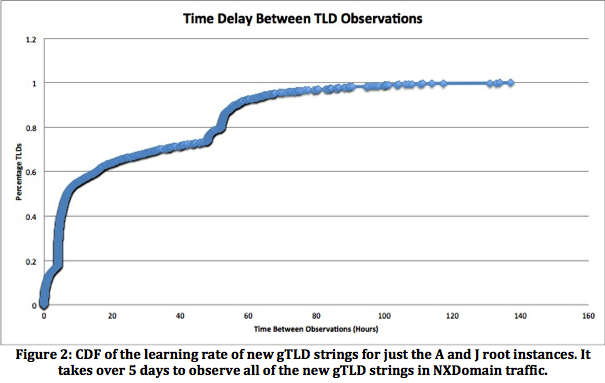

Figure 2 illustrates that when observing just the A and J root servers operated by Verisign, it takes over five days to observe all the applied-for strings, with a really long tail. This could be an artifact of many things, from the obvious very low query frequencies for some strings, to hard-coded or derived server affinities in query sources. The overarching point here is that accuracy across the system over a reasonably large window is key to identifying potentially impacted parties.
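The kind of "time to full coverage" calculation behind those figures can be sketched in a few lines of Python. The observations, the string set, and the function name below are hypothetical, chosen only to show how the vantage point changes the answer; they are not the DITL numbers.

```python
# Hypothetical observations: (hours since capture start, root letter, TLD).
OBSERVATIONS = [
    (0.5, "a", "home"),
    (1.0, "k", "corp"),
    (2.0, "a", "corp"),
    (3.0, "f", "box"),
    (5.0, "l", "cisco"),
    (30.0, "j", "cisco"),
    (130.0, "a", "box"),
]

# Assumed subset of applied-for strings, for illustration only.
APPLIED_FOR = {"home", "corp", "box", "cisco"}


def hours_to_full_coverage(observations, applied_for, letters):
    """Hours until every applied-for string has been seen at the given letters.

    Returns None if some string never shows up at those letters.
    """
    selected = sorted(
        (hours, tld)
        for hours, letter, tld in observations
        if letter in letters and tld in applied_for
    )
    seen = set()
    for hours, tld in selected:
        seen.add(tld)
        if len(seen) == len(applied_for):
            return hours
    return None


all_letters = {letter for _, letter, _ in OBSERVATIONS}
print(hours_to_full_coverage(OBSERVATIONS, APPLIED_FOR, all_letters))
print(hours_to_full_coverage(OBSERVATIONS, APPLIED_FOR, {"a", "j"}))
print(hours_to_full_coverage(OBSERVATIONS, APPLIED_FOR, {"a"}))
```

In this made-up data the combined view reaches full coverage within hours, two letters take days, and a single letter never sees one of the strings at all.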

Regarding SAC045, much of the conclusion and thrust of the second set of recommendations focuses on impacts to the new gTLD applicant and corresponding registry operators. While that’s fine and dandy, the first set of recommendations is certainly more impactful to current internet users and enterprises, and proceeding (absent implementation of these recommendations) is unilaterally transferring risk to these consumers. PayPal highlighted transfer of risk in a letter to ICANN in March of this year. As provided in section 7 of SAC045, the key bit here is that “ICANN should coordinate with the community to identify principles that can serve as the basis for prohibiting the delegation of strings that may introduce security and stability problems at the root level of the DNS,” or at least put controls in place and “forewarn organizations” about the potential impact before those TLDs are delegated in the root zone. That’s responsible engineering, it’s certainly in the public interest, and it’s not hard, but it does require acceptance of the responsibility and express work on developing and effectuating an implementation plan.

ICANN has commissioned a study with Lyman Chapin and Interisle Consulting that should see light any day now. If you’re interested in a peek at what the study may look like, have a listen to the audio file here (note: the Q&A in the latter portion of the file is perhaps the most relevant) from the SSR discussion in Durban, South Africa, at the ICANN meeting last week. I suspect the transcript will be online shortly, and there are a few slides that were used here as well.

Finally, you should definitely be assessing what your applications, network and systems configurations use in your operating environments to determine what impacts to your own systems may occur.
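As a starting point for that kind of self-assessment, here is a small Python sketch that checks resolver search domains and a list of internal names against a set of applied-for strings. The sample configuration text, the internal names, and the `APPLIED_FOR` subset are all hypothetical; a real audit would pull in the full applied-for list and many more configuration sources (certificates, proxy settings, application configs, and so on).

```python
# Hypothetical resolv.conf-style content; 192.0.2.53 is a documentation address.
RESOLV_CONF = """\
# hypothetical resolver configuration
nameserver 192.0.2.53
search office.corp example.com
"""

# Hypothetical internal names gathered from your own inventory.
INTERNAL_NAMES = ["intranet.home", "wiki.example.com"]

# Assumed subset of applied-for strings, for illustration only.
APPLIED_FOR = {"corp", "home"}


def find_colliding_names(resolv_conf_text, internal_names, applied_for):
    """Return names whose rightmost label matches an applied-for string."""
    candidates = list(internal_names)
    for raw in resolv_conf_text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":
            continue  # skip blank lines and comments
        parts = line.split()
        if parts[0] in ("search", "domain"):
            candidates.extend(parts[1:])
    flagged = []
    for name in candidates:
        tld = name.rstrip(".").lower().split(".")[-1]
        if tld in applied_for and name not in flagged:
            flagged.append(name)
    return flagged


flagged = find_colliding_names(RESOLV_CONF, INTERNAL_NAMES, APPLIED_FOR)
print(flagged)
```

Anything this flags is a name that resolves (or fails to resolve) a certain way today and may behave differently once the matching TLD is delegated.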